https://youtu.be/p0TdBqIt3fg?si=n9hl3KGaYCpm0Cm_

Summary

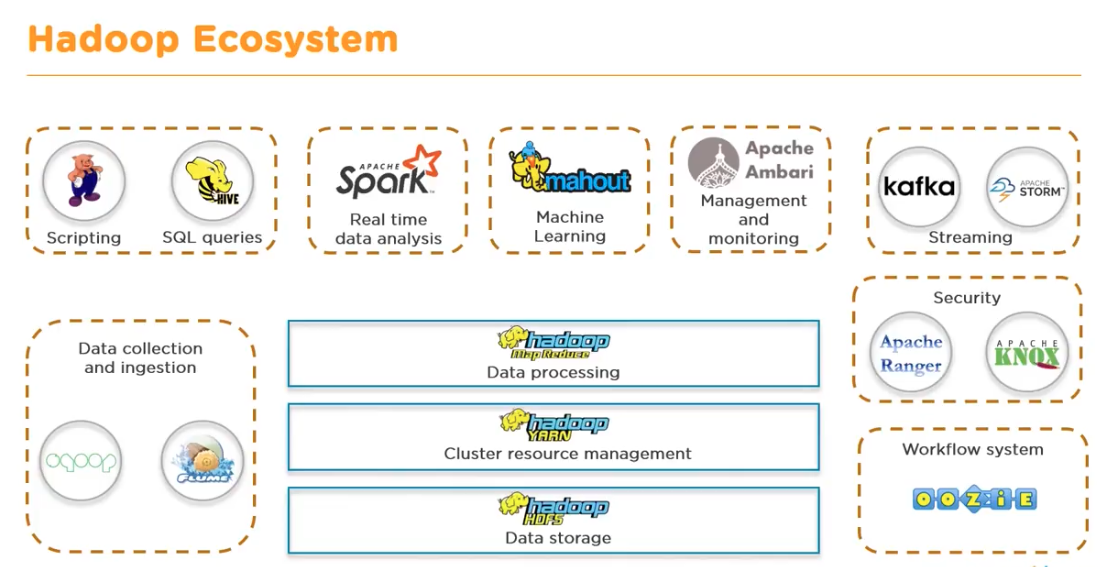

HDFS (Hadoop Distributed File System): Data storage

- Stores data in many different formats across multiple machines

- Two major components: NameNode (master) and DataNode (slave)

- Splits the data into multiple blocks (128MB by default)
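
The sketch below makes the 128 MB default concrete by computing how a single file would be divided into blocks. It is a plain-Python illustration under simplifying assumptions. It ignores replication and ignores how the NameNode places blocks on DataNodes. The function name `split_into_blocks` is hypothetical and not part of any HDFS API.

```python
# Toy illustration of HDFS-style block splitting; this is NOT the HDFS client API.
MB = 1024 * 1024
BLOCK_SIZE = 128 * MB  # default HDFS block size mentioned above


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> list[int]:
    """Return the size in bytes of each block a file of `file_size` bytes occupies."""
    if file_size < 0:
        raise ValueError("file_size must be non-negative")
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        # The final block holds only the leftover bytes; it is not padded to 128 MB.
        sizes.append(remainder)
    return sizes


if __name__ == "__main__":
    # A 300 MB file -> two full 128 MB blocks plus one 44 MB block.
    print([size // MB for size in split_into_blocks(300 * MB)])  # [128, 128, 44]
```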

YARN (Yet Another Resource Negotiator): Cluster resource management

- Manages the cluster of nodes

- Allocates memory, CPU (vcores), and other resources to different applications

- Two major components: ResourceManager (master) and NodeManager (slave)
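
Below is a toy model of the ResourceManager/NodeManager split described above. A master tracks each node's free memory and vcores and grants an application's request on a node that can fit it. The classes are hypothetical stand-ins, and the first-fit placement rule is an assumption made for illustration. Real YARN delegates placement to a pluggable scheduler such as the Capacity Scheduler or the Fair Scheduler.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NodeManager:
    """Toy worker node: tracks its free memory (MB) and virtual cores."""
    name: str
    free_mem_mb: int
    free_vcores: int


class ResourceManager:
    """Toy master that grants container requests on nodes with spare capacity.

    First-fit placement is an assumption made for illustration only; real YARN
    delegates this decision to a pluggable scheduler.
    """

    def __init__(self, nodes: list[NodeManager]):
        self.nodes = nodes

    def allocate(self, app: str, mem_mb: int, vcores: int) -> Optional[tuple[str, str]]:
        for node in self.nodes:
            if node.free_mem_mb >= mem_mb and node.free_vcores >= vcores:
                # Reserve the resources on this node for the application's container.
                node.free_mem_mb -= mem_mb
                node.free_vcores -= vcores
                return app, node.name
        return None  # no single node can satisfy the request right now


if __name__ == "__main__":
    rm = ResourceManager([NodeManager("node1", 4096, 4), NodeManager("node2", 8192, 8)])
    print(rm.allocate("app-1", 3072, 2))  # ('app-1', 'node1')
    print(rm.allocate("app-2", 2048, 2))  # ('app-2', 'node2'): node1 has only 1024 MB left
```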

MapReduce: Data Processing

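- MapReduce processes large volumes of data in parallel across the cluster: a map phase transforms the input into key/value pairs, and a reduce phase aggregates the values for each key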
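
The classic word-count example shows the map, shuffle, and reduce steps. This sketch runs everything in one Python process. In Hadoop, many map and reduce tasks would run in parallel on different nodes, and the framework would perform the shuffle between them. The function names are hypothetical.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line: str):
    """Mapper: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by key (done by the framework in Hadoop)."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reducer: combine all values that share one key."""
    return key, sum(values)


def word_count(lines: list[str]) -> dict[str, int]:
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle(mapped)
    return dict(reduce_phase(k, v) for k, v in sorted(grouped.items()))


if __name__ == "__main__":
    print(word_count(["big data", "big cluster"]))  # {'big': 2, 'cluster': 1, 'data': 1}
```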

Sqoop, Flume: Data collection and ingestion

- Sqoop is used to transfer data between Hadoop and external datastores such as relational databases and enterprise data warehouses

- Flume is a distributed service for collecting, aggregating, and moving large amounts of log data
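
A Flume agent is built from a source (receives events), a channel (buffers them), and a sink (delivers them, for example to HDFS). The single-threaded sketch below mimics that flow to show how logs from several servers are aggregated and moved in batches. `queue.Queue` stands in for the channel. The `source`/`sink` functions and the server names are hypothetical. This is not Flume's configuration format or API.

```python
import queue


def source(events, channel: queue.Queue) -> None:
    """Source: accept log events (here, an in-memory list) and buffer them on the channel."""
    for event in events:
        channel.put(event)


def sink(channel: queue.Queue, batch_size: int) -> list[list[str]]:
    """Sink: drain the channel in fixed-size batches, as if writing each batch to HDFS."""
    batches, batch = [], []
    while not channel.empty():
        batch.append(channel.get())
        if len(batch) == batch_size:
            batches.append(batch)
            batch = []
    if batch:
        batches.append(batch)  # flush the final, partially filled batch
    return batches


if __name__ == "__main__":
    channel = queue.Queue()
    # Aggregate logs from two (hypothetical) web servers into one channel.
    source(["web1: GET /", "web1: GET /login", "web1: POST /login"], channel)
    source(["web2: GET /", "web2: GET /cart"], channel)
    for batch in sink(channel, batch_size=2):
        print(batch)
```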

Pig, Hive: Scripting and SQL

- Pig is used to analyze data in Hadoop. It provides a high-level data-flow language (Pig Latin) for performing many kinds of operations on the data

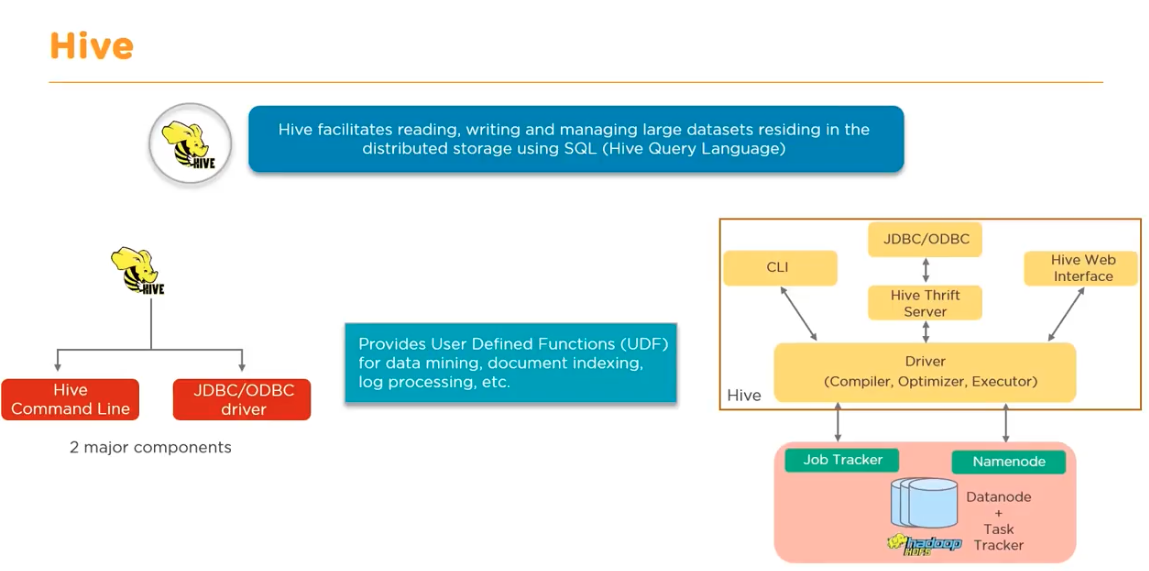

- Hive facilitates reading, writing, and managing large datasets residing in distributed storage using SQL (HiveQL, the Hive Query Language)

- Two major components: the Hive command line and the JDBC/ODBC drivers

- Supports User Defined Functions (UDFs) for tasks such as data mining, document indexing, and log processing
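
Hive cannot run in a self-contained snippet, so the sketch below uses SQLite (Python's built-in `sqlite3`) as a stand-in. It shows the same two ideas: querying a table with SQL, and plugging a custom function into that SQL. The table and the `normalize_level` "UDF" are hypothetical. In Hive itself, UDFs are commonly written in Java and registered with `CREATE FUNCTION`; this sketch does not show that mechanism.

```python
import sqlite3


def normalize_level(level: str) -> str:
    """Example UDF: normalize messy log levels, e.g. ' warn ' -> 'WARN'."""
    return level.strip().upper()


def count_levels(records: list[tuple[str, str]]) -> list[tuple[str, int]]:
    """Count log records per normalized level using SQL plus the custom function."""
    conn = sqlite3.connect(":memory:")
    try:
        # Register the Python function so SQL queries can call it by name.
        conn.create_function("normalize_level", 1, normalize_level)
        conn.execute("CREATE TABLE logs (host TEXT, level TEXT)")
        conn.executemany("INSERT INTO logs VALUES (?, ?)", records)
        return conn.execute(
            """
            SELECT normalize_level(level) AS lvl, COUNT(*) AS n
            FROM logs
            GROUP BY normalize_level(level)
            ORDER BY lvl
            """
        ).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    logs = [("web1", "error"), ("web1", " warn "), ("web2", "ERROR"), ("web2", "info")]
    print(count_levels(logs))  # [('ERROR', 2), ('INFO', 1), ('WARN', 1)]
```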

Spark: Real-time data analysis

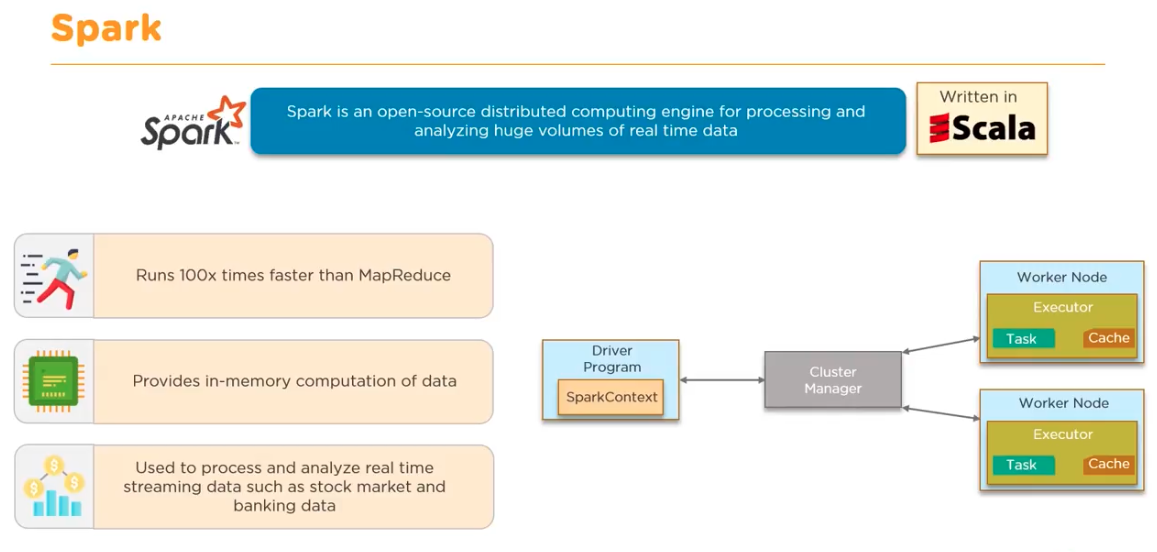

- Spark is an open-source distributed computing engine for processing and analyzing huge volumes of data, including real-time data (written in Scala)

- Can run up to 100x faster than MapReduce for some in-memory workloads

- Keeps intermediate data in memory instead of writing it to disk between processing steps

- Used to process and analyze real-time streaming data such as stock market and banking data
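
In-memory computation pays off most in iterative jobs that pass over the same data many times. The toy below counts storage reads for an iterative job in two modes. In one, each pass re-reads its input, roughly like a chain of MapReduce jobs. In the other, the data is read once and kept in memory, roughly like Spark caching. `Storage` and `run_iterations` are hypothetical and greatly simplified. In actual PySpark code one would call `.cache()` or `.persist()` on an RDD or DataFrame instead.

```python
class Storage:
    """Hypothetical stand-in for disk/HDFS that counts how many times data is read."""

    def __init__(self, records):
        self._records = list(records)
        self.reads = 0

    def read(self) -> list[int]:
        self.reads += 1
        return list(self._records)


def run_iterations(storage: Storage, iterations: int, keep_in_memory: bool) -> list[int]:
    """Run a simple iterative job (sum the data on each pass).

    keep_in_memory=False: every pass re-reads its input from storage (MapReduce-like).
    keep_in_memory=True: read once and reuse the in-memory copy (Spark-like caching).
    """
    cached = storage.read() if keep_in_memory else None
    results = []
    for _ in range(iterations):
        data = cached if keep_in_memory else storage.read()
        results.append(sum(data))
    return results


if __name__ == "__main__":
    for in_memory in (False, True):
        store = Storage(range(1_000))
        run_iterations(store, iterations=10, keep_in_memory=in_memory)
        print(f"keep_in_memory={in_memory}: {store.reads} storage reads")
```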

Mahout: Machine learning

- Alternative: Spark MLlib (e.g., used from Python via PySpark)

Ambari: Cluster management and monitoring

- An Apache open-source tool for managing Hadoop clusters and keeping track of running applications and their statuses

Kafka, Storm: Streaming

- Kafka is a distributed streaming platform for storing and processing streams of records (written in Scala and Java)

- Used to build real-time streaming data pipelines that reliably move data between applications

- Used to build real-time streaming applications that transform or react to streams of data

- Kafka works as a publish-subscribe messaging system for transferring data from one application to another

- Storm is a processing engine that processes real-time streaming data at very high speed (written in Clojure)

- Can process over a million tuples per second on a single node

- Integrates with Hadoop to achieve higher throughput
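
Kafka stores records in topics as ordered, append-only logs (per partition). Each record has an offset, and each consumer tracks its own offset, so reading does not remove records for other consumers. The sketch below models one partition in plain Python to illustrate that storage and messaging model. `ToyTopicLog` and `ToyConsumer` are hypothetical. This is not a Kafka client API, and it ignores partitions, replication, and retention.

```python
class ToyTopicLog:
    """Hypothetical single-partition topic: an append-only list of records with offsets."""

    def __init__(self):
        self._records: list[str] = []

    def append(self, record: str) -> int:
        # Producer side: append a record and return its offset (position in the log).
        self._records.append(record)
        return len(self._records) - 1

    def read(self, offset: int, max_records: int) -> list[str]:
        # Reading does not remove records, so other consumers can still read them.
        return self._records[offset:offset + max_records]


class ToyConsumer:
    """Hypothetical consumer that tracks its own read position (offset)."""

    def __init__(self, log: ToyTopicLog):
        self.log = log
        self.offset = 0

    def poll(self, max_records: int = 10) -> list[str]:
        batch = self.log.read(self.offset, max_records)
        self.offset += len(batch)  # advance past what was just consumed
        return batch


if __name__ == "__main__":
    topic = ToyTopicLog()
    for event in ["order-1", "order-2", "order-3"]:
        topic.append(event)
    billing, analytics = ToyConsumer(topic), ToyConsumer(topic)
    print(billing.poll(2))   # ['order-1', 'order-2']
    print(billing.poll())    # ['order-3']
    print(analytics.poll())  # ['order-1', 'order-2', 'order-3']
```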

Ranger, Knox: Security

Oozie: Workflow system

- Workflow scheduler system to manage Hadoop jobs
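
An Oozie workflow is a directed acyclic graph (DAG) of actions, defined in XML. An action runs only after the actions it depends on have finished. The sketch below shows just that dependency ordering using Python's standard `graphlib` module (Python 3.9+). The workflow and its action names are hypothetical. Oozie's XML format, scheduling, and retry handling are not modeled.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# Hypothetical workflow: each action maps to the set of actions it depends on.
WORKFLOW = {
    "sqoop_import": set(),
    "hive_clean": {"sqoop_import"},
    "pig_aggregate": {"hive_clean"},
    "spark_report": {"hive_clean"},
    "notify": {"pig_aggregate", "spark_report"},
}


def run_workflow(dag, run_action) -> list[str]:
    """Run every action only after all of its dependencies have run.

    TopologicalSorter raises graphlib.CycleError if the graph is not a DAG.
    """
    order = []
    for action in TopologicalSorter(dag).static_order():
        run_action(action)
        order.append(action)
    return order


if __name__ == "__main__":
    run_workflow(WORKFLOW, lambda action: print("running", action))
```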
